- Introduction

- Implementation Approach

- Implementation Mechanisms

- Conclusion

- Resources

Introduction

I gave a talk at iOSDC Japan 2025 titled “Sign Language Gesture Detection and Translation – The Possibilities and Limitations of Hand Tracking”.

In this session, I explored in depth the technical implementation of real-time sign language gesture recognition using the hand tracking features of visionOS, examining both its possibilities and its practical constraints.

The slides and information used in the presentation are available here:

- Slides (Download from TestFlight)

- HandGestureKit

Implementation Approach

Gesture Detection Implementation Approach

The gesture detection system presented in this session is implemented in three steps:

1. Initialize the Hand Tracking System

- Request permissions with ARKitSession: Requires adding a usage description (NSHandsTrackingUsageDescription) to Info.plist

- Enable .hand with SpatialTrackingSession: Leveraging new features in visionOS 2.0

2. Retrieve Hand Joint Position and Orientation Information

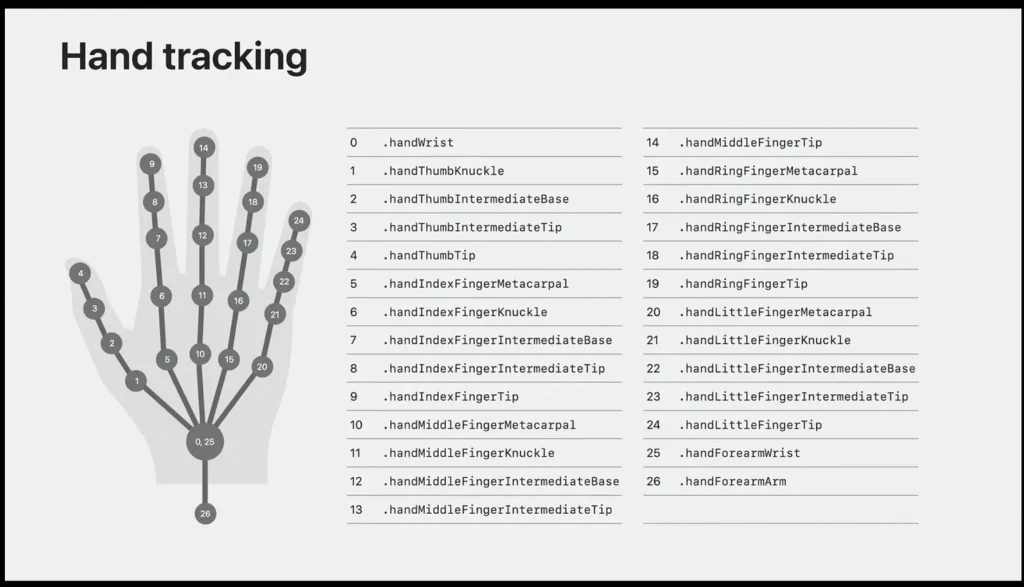

- Select necessary joints from HandSkeleton: Choose from 27 joints as needed

- Set up AnchorEntity for real-time joint tracking: Automatically updates joint positions

- Access Entity joint positions and orientations via Components: Data access using ECS pattern

3. Determine Gestures from Joint Information

- Determine whether joint positions and orientations match gesture conditions

- Protocol-oriented design makes adding new gestures easy

Development Environment and Prerequisites

Required Environment

The implementation presented in this session requires the following development environment:

- Xcode: 16.2 or later

- visionOS: 2.0 or later (SpatialTrackingSession, which lets AnchorEntity expose hand tracking transforms, was introduced in visionOS 2.0)

- Swift: 6.0

- Device: Apple Vision Pro (Hand tracking is limited in the simulator)

Important Dependencies

import ARKit // HandSkeleton, ARKitSession

import RealityKit // SpatialTrackingSession, AnchorEntity, Entity-Component-System

import SwiftUI // UI construction

Note: SpatialTrackingSession is only available in visionOS 2.0 and later. An alternative implementation is required for visionOS 1.x.

Session Overview

What You’ll Learn

This session comprehensively covers the following aspects of building a gesture recognition system for visionOS:

- Hand Tracking Fundamentals: Understanding visionOS SpatialTrackingSession and AnchorEntity

- Gesture Detection Architecture: Building a flexible, protocol-oriented gesture detection system

- Practical Implementation: Implementing sign language gestures with validation

- Performance Optimization: Real-time gesture processing strategies at 90Hz

- Limitations and Workarounds: Addressing spatial hand tracking challenges

Target Audience

This session is aimed at iOS/visionOS developers who:

- Have basic knowledge of SwiftUI and RealityKit

- Are interested in spatial computing and gesture-based interfaces

- Want to understand practical aspects of hand tracking implementation

- Are interested in the future of human-computer interaction

Implementation Mechanisms

How HandSkeleton Works

What is HandSkeleton?

A hand skeleton model provided by ARKit, consisting of 27 joint points.

This enables high-precision tracking of hand shapes.

Reference: https://developer.apple.com/videos/play/wwdc2023/10082/?time=970

Available Joint Information

- Wrist: Hand reference point

- Finger joints:

- Metacarpal

- Proximal phalanx

- Intermediate phalanx

- Distal phalanx

- Tip

- Forearm: Determines arm orientation

Data Available from Each Joint

// Position information (SIMD3<Float>)

let position = joint.position

// Orientation information (simd_quatf)

let orientation = joint.orientation

// Relative position from parent joint

let relativePosition = joint.relativeTransform

Coordinate System

- Right-handed coordinate system: Right: +X, Up: +Y, Forward: -Z (+Z points toward the viewer)

- Units: Meters

- Origin: Based on device initial position
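To make the coordinate convention concrete, here is a minimal sketch of how a direction check can be expressed with plain SIMD vectors. The names `worldUp`, `worldForward`, `angle(between:and:)`, and `isPointing(_:toward:tolerance:)` are my own illustrative choices and are not part of HandGestureKit; the π/4 tolerance mirrors the default mentioned later in this article.

```swift
import Foundation

// Unit vectors for the convention above (right-handed, +Y up, forward = -Z).
// These names are illustrative only and are not taken from HandGestureKit.
let worldUp = SIMD3<Float>(0, 1, 0)
let worldForward = SIMD3<Float>(0, 0, -1)

/// Returns the angle in radians between two non-zero vectors.
func angle(between a: SIMD3<Float>, and b: SIMD3<Float>) -> Float {
    let lengthA = (a * a).sum().squareRoot()
    let lengthB = (b * b).sum().squareRoot()
    let cosine = (a * b).sum() / (lengthA * lengthB)
    // Clamp to [-1, 1] so rounding error cannot push acos into NaN.
    return acos(max(-1, min(1, cosine)))
}

/// True when `direction` lies within `tolerance` radians of `target`.
func isPointing(_ direction: SIMD3<Float>,
                toward target: SIMD3<Float>,
                tolerance: Float = .pi / 4) -> Bool {
    return angle(between: direction, and: target) <= tolerance
}

// A thumb tilted slightly forward still counts as pointing up.
let thumbDirection = SIMD3<Float>(0, 0.9, -0.2)
print(isPointing(thumbDirection, toward: worldUp))      // true
print(isPointing(thumbDirection, toward: worldForward)) // false
```

Working with angles rather than exact vector equality is what lets a gesture tolerate the natural wobble of a real hand.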

RealityKit and ECS (Entity-Component-System)

ECS Architecture Basics

RealityKit is a 3D rendering framework that adopts the ECS pattern:

1. Entity

Represents objects in 3D space.

Spheres, text, hands – all 3D elements are Entities.

2. Component

Adds functionality to Entities.

Appearance (ModelComponent), movement (Transform), physics (PhysicsBodyComponent), etc.

3. System

Processes Entities with specific Components every frame.

The core of the game loop.
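If the three roles are new to you, the toy model below may help. It is a plain-Swift sketch of the ECS idea and deliberately does not use RealityKit; every type in it (`ToyEntity`, `ToyComponent`, `ToySystem`, `MovementSystem`) is hypothetical.

```swift
// A toy ECS in plain Swift. This is NOT RealityKit's API; every type here is hypothetical.
protocol ToyComponent {}

final class ToyEntity {
    private var storage: [ObjectIdentifier: ToyComponent] = [:]

    // Attach or overwrite a component, keyed by its type.
    func set<C: ToyComponent>(_ component: C) {
        storage[ObjectIdentifier(C.self)] = component
    }

    // Look up a component by type; nil if the entity does not have one.
    func get<C: ToyComponent>(_ type: C.Type) -> C? {
        return storage[ObjectIdentifier(type)] as? C
    }
}

struct Position: ToyComponent { var x: Float }
struct Velocity: ToyComponent { var metersPerSecond: Float }

protocol ToySystem {
    func update(entities: [ToyEntity], deltaTime: Float)
}

/// Moves every entity that has both a Position and a Velocity; others are skipped.
struct MovementSystem: ToySystem {
    func update(entities: [ToyEntity], deltaTime: Float) {
        for entity in entities {
            guard var position = entity.get(Position.self),
                  let velocity = entity.get(Velocity.self) else { continue }
            position.x += velocity.metersPerSecond * deltaTime
            entity.set(position)
        }
    }
}

let moving = ToyEntity()
moving.set(Position(x: 0))
moving.set(Velocity(metersPerSecond: 2))
let fixed = ToyEntity()
fixed.set(Position(x: 5))

// One simulated frame of 0.5 seconds.
MovementSystem().update(entities: [moving, fixed], deltaTime: 0.5)
print(moving.get(Position.self)!.x) // 1.0
print(fixed.get(Position.self)!.x)  // 5.0
```

The gesture tracking System described later follows the same shape: it queries for entities that carry a hand tracking component and processes only those.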

Basic RealityView Structure

RealityView { content in

// Create root Entity and add to scene

let rootEntity = Entity()

content.add(rootEntity)

// Create hand entity container

let handEntitiesContainerEntity = Entity()

rootEntity.addChild(handEntitiesContainerEntity)

}

Enabling Hand Tracking with SpatialTrackingSession

Implementation using SpatialTrackingSession, introduced in visionOS 2.0, together with AnchorEntity:

// Enable hand tracking

let session = SpatialTrackingSession()

let config = SpatialTrackingSession.Configuration(tracking: [.hand])

await session.run(config)

// Auto-track joints with AnchorEntity

let anchorEntity = AnchorEntity(

.hand(.left, location: .palm),

trackingMode: .predicted // Reduce tracking latency with prediction

)

// Auto-tracking starts just by adding

handEntitiesContainerEntity.addChild(anchorEntity)

Creating and Placing Joint Markers

// Create Entity for sphere marker

let sphere = ModelEntity(

mesh: .generateSphere(radius: 0.005),

materials: [UnlitMaterial(color: .yellow)]

)

// Add to AnchorEntity (follows joint)

anchorEntity.addChild(sphere)

HandGestureTrackingSystem Implementation

Create a custom System to monitor hand state every frame:

1. Get Hand Entities with EntityQuery

let handEntities = context.scene.performQuery(

EntityQuery(where: .has(HandTrackingComponent.self))

)

2. Extract Information from HandTrackingComponent

for entity in handEntities {

if let component = entity.components[HandTrackingComponent.self] {

let chirality = component.chirality // .left or .right

let handSkeleton = component.handSkeleton

}

}

3. Gesture Detection Processing

let detectedGestures = GestureDetector.detectGestures(

from: handTrackingComponents,

targetGestures: targetGestures

)

This System’s update(context:) method is called automatically every frame and retrieves the information it needs from SceneUpdateContext.

Technical Architecture

Repository Structure

The project consists of three main packages, each serving a specific role in the gesture recognition pipeline:

Slidys/

├── Packages/

│ ├── iOSDC2025Slide/ # Presentation built with Slidys framework

│ ├── HandGestureKit/ # Core gesture detection library (OSS-ready)

│ └── HandGesturePackage/ # Application-specific implementation

HandGestureKit: Core Library

HandGestureKit functions as the foundation layer for gesture recognition.

It’s designed as a standalone open-source library that can be integrated into any visionOS project.

Key Components

1. Gesture Data Model

The library provides comprehensive data structures for hand tracking:

public struct SingleHandGestureData {

public let handTrackingComponent: HandTrackingComponent

public let handKind: HandKind

// Threshold settings for gesture detection accuracy

public let angleToleranceRadians: Float

public let distanceThreshold: Float

public let directionToleranceRadians: Float

// Pre-computed values for performance optimization

private let palmNormal: SIMD3<Float>

private let forearmDirection: SIMD3<Float>

private let wristPosition: SIMD3<Float>

private let isArmExtended: Bool

}

This struct encapsulates all the hand tracking data a gesture needs and minimizes runtime overhead by pre-computing frequently used values.
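As a rough illustration of the pre-computation idea, the sketch below derives a palm normal once, at construction time, from three joint positions. `PalmSnapshot` and its parameter names are hypothetical and much simpler than the real SingleHandGestureData; which joints the library actually uses is an assumption on my part.

```swift
// Hypothetical stand-in for the pre-computation idea; names are not from HandGestureKit.
struct PalmSnapshot {
    let wrist: SIMD3<Float>
    /// Unit normal of the palm plane, computed once at construction.
    let palmNormal: SIMD3<Float>

    init(wrist: SIMD3<Float>, indexKnuckle: SIMD3<Float>, littleKnuckle: SIMD3<Float>) {
        self.wrist = wrist
        let a = indexKnuckle - wrist
        let b = littleKnuckle - wrist
        // The cross product of two in-plane edges is perpendicular to the palm.
        let n = SIMD3<Float>(
            a.y * b.z - a.z * b.y,
            a.z * b.x - a.x * b.z,
            a.x * b.y - a.y * b.x
        )
        let length = (n * n).sum().squareRoot()
        // Fall back to zero for degenerate (collinear) input instead of dividing by zero.
        self.palmNormal = length > 0 ? n / length : .zero
    }
}

let snapshot = PalmSnapshot(
    wrist: SIMD3<Float>(0, 0, 0),
    indexKnuckle: SIMD3<Float>(0.03, 0.08, 0),
    littleKnuckle: SIMD3<Float>(-0.03, 0.08, 0)
)
// For this flat, upright hand the normal points along the Z axis.
print(snapshot.palmNormal)
```

Every gesture that later asks about palm orientation reads the stored value instead of repeating the cross product. Note that the sign of the normal depends on the order of the two edges, so left and right hands need opposite orderings.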

2. Protocol-Oriented Design

The gesture system is built on a hierarchical protocol structure:

// Base protocol for all gestures

public protocol BaseGestureProtocol {

var id: String { get }

var gestureName: String { get }

var priority: Int { get }

var gestureType: GestureType { get }

}

// Single-hand gesture protocol with rich default implementations

public protocol SingleHandGestureProtocol: BaseGestureProtocol {

func matches(_ gestureData: SingleHandGestureData) -> Bool

// Finger state requirements

func requiresFingersStraight(_ fingers: [FingerType]) -> Bool

func requiresFingersBent(_ fingers: [FingerType]) -> Bool

func requiresFingerPointing(_ finger: FingerType, direction: GestureDetectionDirection) -> Bool

// Palm orientation requirements

func requiresPalmFacing(_ direction: GestureDetectionDirection) -> Bool

// Arm position requirements

func requiresArmExtended() -> Bool

func requiresArmExtendedInDirection(_ direction: GestureDetectionDirection) -> Bool

}

This protocol design makes it easy to add new gestures by overriding only the necessary conditions.
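The mechanism behind this is ordinary Swift protocol extensions. Below is a stripped-down sketch, assuming a hypothetical `SimpleGesture` protocol and `HandPose` type of my own invention, that shows how a shared default `matches` can be driven by a property each gesture overrides.

```swift
// Hypothetical, simplified stand-ins; the real HandGestureKit types are richer.
enum Finger: CaseIterable { case thumb, index, middle, ring, little }

struct HandPose {
    var straightFingers: Set<Finger>
    func isStraight(_ finger: Finger) -> Bool { return straightFingers.contains(finger) }
}

protocol SimpleGesture {
    var name: String { get }
    /// Fingers that must be straight; all other fingers must not be.
    var requiredStraightFingers: Set<Finger> { get }
    func matches(_ pose: HandPose) -> Bool
}

extension SimpleGesture {
    // Default: no finger is required to be straight (a fist).
    var requiredStraightFingers: Set<Finger> { return [] }

    // Default matching logic shared by every conforming gesture.
    func matches(_ pose: HandPose) -> Bool {
        return Finger.allCases.allSatisfy { finger in
            pose.isStraight(finger) == requiredStraightFingers.contains(finger)
        }
    }
}

// Each gesture overrides only what differs from the defaults.
struct Fist: SimpleGesture { let name = "Fist" }
struct Peace: SimpleGesture {
    let name = "Peace"
    var requiredStraightFingers: Set<Finger> { return [.index, .middle] }
}

let pose = HandPose(straightFingers: [.index, .middle])
print(Peace().matches(pose)) // true
print(Fist().matches(pose))  // false
```

Because `requiredStraightFingers` is declared in the protocol itself, the default `matches` picks up each gesture's override through dynamic dispatch.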

3. Gesture Detection Engine

The GestureDetector class evaluates registered gestures in priority order:

public class GestureDetector {

private var gestures: [BaseGestureProtocol] = []

public func detect(from handData: SingleHandGestureData) -> [BaseGestureProtocol] {

return gestures

.sorted { $0.priority < $1.priority }

.filter { gesture in

guard let singleHandGesture = gesture as? SingleHandGestureProtocol else {

return false

}

return singleHandGesture.matches(handData)

}

}

}

Implementation Examples: Sign Language Gestures

1. Thumbs Up Gesture

public class ThumbsUpGesture: SingleHandGestureProtocol {

public var gestureName: String { "Thumbs Up" }

public var priority: Int { 100 }

// Requires only thumb extended

public var requiresOnlyThumbStraight: Bool { true }

// Requires thumb pointing up

public func requiresFingerPointing(_ finger: FingerType, direction: GestureDetectionDirection) -> Bool {

return finger == .thumb && direction == .up

}

}

Detection Logic Details

The thumbs up gesture’s matches function leverages the protocol’s default implementation to check the following conditions:

// From SingleHandGestureProtocol default implementation

public func matches(_ gestureData: SingleHandGestureData) -> Bool {

// 1. Check if only thumb is extended

if requiresOnlyThumbStraight {

// Internally validates these conditions:

// - Thumb: isFingerStraight(.thumb) == true

// - Index: isFingerBent(.index) == true

// - Middle: isFingerBent(.middle) == true

// - Ring: isFingerBent(.ring) == true

// - Little: isFingerBent(.little) == true

guard isOnlyThumbStraight(gestureData) else { return false }

}

// 2. Check if thumb is pointing up

if requiresFingerPointing(.thumb, direction: .up) {

// Calculate angle between thumb vector and up direction

// True if within angleToleranceRadians (default: π/4)

guard gestureData.isFingerPointing(.thumb, direction: .up) else { return false }

}

return true

}

Finger Bend Detection Implementation

// Detection logic in SingleHandGestureData

public func isFingerStraight(_ finger: FingerType) -> Bool {

// Get joint angles for each finger

let jointAngles = getJointAngles(for: finger)

// "Straight" if all joints bend less than threshold

return jointAngles.allSatisfy { angle in

angle < straightThreshold // Default: 30 degrees

}

}

public func isFingerBent(_ finger: FingerType) -> Bool {

// "Bent" if at least one joint bends more than threshold

let jointAngles = getJointAngles(for: finger)

return jointAngles.contains { angle in

angle > bentThreshold // Default: 60 degrees

}

}

2. Peace Sign

public class PeaceSignGesture: SingleHandGestureProtocol {

public var gestureName: String { "Peace Sign" }

public var priority: Int { 90 }

// Requires only index and middle fingers extended

public var requiresOnlyIndexAndMiddleStraight: Bool { true }

// Requires palm facing forward

public func requiresPalmFacing(_ direction: GestureDetectionDirection) -> Bool {

return direction == .forward

}

}

Detection Logic Details

public func matches(_ gestureData: SingleHandGestureData) -> Bool {

// 1. Check if only index and middle fingers are extended

if requiresOnlyIndexAndMiddleStraight {

// Must satisfy all conditions:

// - gestureData.isFingerStraight(.index) == true

// - gestureData.isFingerStraight(.middle) == true

// - gestureData.isFingerBent(.thumb) == true

// - gestureData.isFingerBent(.ring) == true

// - gestureData.isFingerBent(.little) == true

guard isOnlyIndexAndMiddleStraight(gestureData) else { return false }

}

// 2. Check palm orientation

if requiresPalmFacing(.forward) {

// Calculate palm normal vector and check angle with forward direction

let palmNormal = gestureData.palmNormal

let forwardVector = SIMD3<Float>(0, 0, -1) // Forward direction

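// Clamp the dot product so rounding error cannot make acos return NaN

let angle = acos(max(-1, min(1, dot(palmNormal, forwardVector))))

guard angle < gestureData.directionToleranceRadians else { return false }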

}

return true

}

3. Prayer Gesture (Two Hands)

public class PrayerGesture: TwoHandGestureProtocol {

public var gestureName: String { "Prayer" }

public var priority: Int { 80 }

public func matches(_ leftGestureData: SingleHandGestureData, _ rightGestureData: SingleHandGestureData) -> Bool {

// Palms facing each other

let palmsFacing = arePalmsFacingEachOther(leftGestureData, rightGestureData)

// Hands are close together

let handsClose = areHandsClose(leftGestureData, rightGestureData, threshold: 0.1)

// All fingers extended

let fingersStraight = areAllFingersStraight(leftGestureData) &&

areAllFingersStraight(rightGestureData)

return palmsFacing && handsClose && fingersStraight

}

}

Mechanisms for Concise Gesture Detection Implementation

Conciseness Through Protocol Default Implementations

HandGestureKit’s greatest feature is that rich protocol default implementations allow new gestures to be defined with minimal code:

// Adding new gestures is extremely simple

public class OKSignGesture: SingleHandGestureProtocol {

public var gestureName: String { "OK Sign" }

public var priority: Int { 95 }

// Declaratively define only necessary conditions

public var requiresOnlyIndexAndThumbTouching: Bool { true }

public var requiresMiddleRingLittleStraight: Bool { true }

}

With just this concise definition, the complex gesture detection logic is applied automatically.

Condition Combination Patterns

Commonly used finger combinations are provided as dedicated properties:

// Convenient property set

public protocol SingleHandGestureProtocol {

// Complex finger conditions (convenience properties)

var requiresAllFingersBent: Bool { get } // Fist (all fingers bent)

var requiresOnlyIndexFingerStraight: Bool { get } // Index finger only

var requiresOnlyIndexAndMiddleStraight: Bool { get } // Peace sign

var requiresOnlyThumbStraight: Bool { get } // Thumbs up

var requiresOnlyLittleFingerStraight: Bool { get } // Little finger only

// Wrist states

var requiresWristBentOutward: Bool { get } // Wrist bent outward

var requiresWristBentInward: Bool { get } // Wrist bent inward

var requiresWristStraight: Bool { get } // Wrist straight

}

Validation Utilities

The GestureValidation enum provides commonly used validation patterns:

public enum GestureValidation {

// Validate that only specific fingers are extended

static func validateOnlyTargetFingersStraight(

_ gestureData: SingleHandGestureData,

targetFingers: [FingerType]

) -> Bool {

for finger in FingerType.allCases {

if targetFingers.contains(finger) {

guard gestureData.isFingerStraight(finger) else { return false }

} else {

guard gestureData.isFingerBent(finger) else { return false }

}

}

return true

}

// Validate fist gesture

static func validateFistGesture(_ gestureData: SingleHandGestureData) -> Bool {

return FingerType.allCases.allSatisfy {

gestureData.isFingerBent($0)

}

}

}

GestureDetector Processing Logic

Protocol Hierarchy

GestureDetector uses a hierarchical protocol design to uniformly process various types of gestures:

protocol BaseGestureProtocol {

var gestureName: String { get }

var priority: Int { get }

var gestureType: GestureType { get }

}

protocol SingleHandGestureProtocol: BaseGestureProtocol {

func matches(_ gestureData: SingleHandGestureData) -> Bool

}

protocol TwoHandsGestureProtocol: BaseGestureProtocol {

func matches(_ gestureData: HandsGestureData) -> Bool

}

Detection Architecture

class GestureDetector {

// Gesture array sorted by priority

private var sortedGestures: [BaseGestureProtocol]

// Dedicated serial gesture tracker

private let serialTracker = SerialGestureTracker()

// Type-based index (for fast lookup)

private var typeIndex: [GestureType: [Int]]

}

Convenient Detection Methods

SingleHandGestureData provides convenient methods for concise gesture detection:

// Convenient methods provided by SingleHandGestureData

gestureData.isFingerStraight(.index) // Is index finger extended?

gestureData.isFingerBent(.thumb) // Is thumb bent?

gestureData.isPalmFacing(.forward) // Is palm facing forward?

gestureData.areAllFingersExtended() // Are all fingers extended?

gestureData.isAllFingersBent // Is it a fist?

// Example of combining multiple conditions

guard gestureData.isFingerStraight(.index),

gestureData.isFingerStraight(.middle),

gestureData.areAllFingersBentExcept([.index, .middle])

else { return false }

Gesture Detection Conditions

Four main conditions are used for gesture detection:

- Finger state: isExtended/isCurled

- Hand orientation: palmDirection

- Joint angles: angleWithParent

- Joint distances: jointToJointDistance
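The last two conditions come down to simple vector arithmetic on joint positions. Here is a minimal sketch, assuming hypothetical helper names (`bendAngleDegrees`, `jointDistance`) and made-up joint positions; the 30 and 60 degree thresholds are the defaults quoted earlier, while the 2 cm pinch threshold is purely my assumption.

```swift
import Foundation

/// Bend angle in degrees at `joint`, given the neighbouring joints on either side.
/// 0 means the three points are collinear (fully straight).
func bendAngleDegrees(parent: SIMD3<Float>, joint: SIMD3<Float>, child: SIMD3<Float>) -> Float {
    let incoming = joint - parent
    let outgoing = child - joint
    let lengths = (incoming * incoming).sum().squareRoot()
        * (outgoing * outgoing).sum().squareRoot()
    guard lengths > 0 else { return 0 }
    // Clamp so rounding error cannot push acos into NaN.
    let cosine = max(-1, min(1, (incoming * outgoing).sum() / lengths))
    return acos(cosine) * 180 / .pi
}

/// Straight-line distance in meters between two joints.
func jointDistance(_ a: SIMD3<Float>, _ b: SIMD3<Float>) -> Float {
    let d = a - b
    return (d * d).sum().squareRoot()
}

// A finger bent 90 degrees at its middle joint (positions are made up).
let knuckle = SIMD3<Float>(0, 0, 0)
let middleJoint = SIMD3<Float>(0, 0.04, 0)
let fingertip = SIMD3<Float>(0, 0.04, -0.03)

let bend = bendAngleDegrees(parent: knuckle, joint: middleJoint, child: fingertip)
print(bend < 30) // false: not straight
print(bend > 60) // true: bent

// Thumb tip 8 mm from the index tip: inside the assumed 2 cm pinch threshold.
let thumbTip = SIMD3<Float>(0.008, 0.04, -0.03)
print(jointDistance(thumbTip, fingertip) < 0.02) // true
```

Leaving a gap between the "straight" and "bent" thresholds means a half-curled finger satisfies neither, which helps avoid flickering between two gestures.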

Detection Flow

func detectGestures(from components: [HandTrackingComponent]) -> GestureDetectionResult {

// 1. Check serial gesture timeout

if serialTracker.isTimedOut() {

serialTracker.reset()

}

// 2. Detect normal gestures in priority order

for gesture in sortedGestures {

if gesture.matches(handData) {

return [gesture.gestureName]

}

}

// 3. Update serial gesture progress

if let serial = checkSerialGesture() {

return handleSerialResult(serial)

}

}

Sequential Gesture Tracking System

SerialGestureProtocol

A mechanism for detecting time-sequential gestures (like sign language):

protocol SerialGestureProtocol {

// Array of gestures to detect in sequence

var gestures: [BaseGestureProtocol] { get }

// Maximum allowed time between gestures (seconds)

var intervalSeconds: TimeInterval { get }

// Step descriptions (for UI display)

var stepDescriptions: [String] { get }

}

SerialGestureTracker – State Management

- Track current gesture index

- Monitor timeout between gestures

- Reset state after timeout or completion

Detection Flow Example

// Example: "Thank you" in sign language

let arigatouGesture = SignLanguageArigatouGesture()

gestures = [

// Step 1: Initial position detection

ArigatouInitialPositionGesture(), // Both hands at same height

// Step 2: Final position detection → completed ✅

ArigatouFinalPositionGesture() // Right hand moved to upper position

]

SerialGestureDetectionResult

Sequential gesture detection results have four states:

- progress: Advancing to next step

- completed: All steps completed

- timeout: Time expired

- notMatched: No match

This mechanism enables detection of dynamic gestures by dividing them into several phases.
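To show how the index, the timeout, and the four result states fit together, here is a small state machine sketch. `SerialTracker` and `SerialResult` are hypothetical names that mirror the prose above, not the library's actual source, and timestamps are passed in explicitly to keep the example deterministic.

```swift
import Foundation

// Hypothetical sketch; type and case names mirror the prose, not the library's source.
enum SerialResult: Equatable { case progress, completed, timeout, notMatched }

struct SerialTracker {
    let stepCount: Int
    let intervalSeconds: TimeInterval
    private(set) var currentIndex = 0
    private var lastMatchTime: TimeInterval?

    init(stepCount: Int, intervalSeconds: TimeInterval) {
        self.stepCount = stepCount
        self.intervalSeconds = intervalSeconds
    }

    /// Feed one frame: `matchedStep` is the index of the step whose pose matched, if any.
    mutating func advance(matchedStep: Int?, now: TimeInterval) -> SerialResult {
        // Too long since the previous step: drop the partial sequence.
        if let last = lastMatchTime, now - last > intervalSeconds {
            reset()
            return .timeout
        }
        guard matchedStep == currentIndex else { return .notMatched }
        currentIndex += 1
        lastMatchTime = now
        if currentIndex == stepCount {
            reset()
            return .completed
        }
        return .progress
    }

    private mutating func reset() {
        currentIndex = 0
        lastMatchTime = nil
    }
}

var tracker = SerialTracker(stepCount: 2, intervalSeconds: 1.0)
print(tracker.advance(matchedStep: 0, now: 0.0))   // progress
print(tracker.advance(matchedStep: nil, now: 0.5)) // notMatched
print(tracker.advance(matchedStep: 1, now: 0.8))   // completed
```

In this sketch a frame that arrives after the timeout is discarded along with the partial sequence, so the user has to start again from step 1.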

GestureDetector: Detailed Gesture Detection Engine

GestureDetector Overview

GestureDetector is the core gesture detection engine of HandGestureKit.

This class efficiently evaluates registered gesture patterns and recognizes gestures in real time.

Basic Usage

// 1. Initialize GestureDetector

let gestureDetector = GestureDetector()

// 2. Register gestures to recognize

gestureDetector.registerGesture(ThumbsUpGesture())

gestureDetector.registerGesture(PeaceSignGesture())

gestureDetector.registerGesture(PrayerGesture())

// 3. Detect gestures from hand data

let detectedGestures = gestureDetector.detect(from: handGestureData)

// 4. Process detection results

for gesture in detectedGestures {

print("Detected gesture: \(gesture.gestureName)")

}

Internal Implementation and Design Points

1. Priority-Based Evaluation System

public class GestureDetector {

private var gestures: [BaseGestureProtocol] = []

public func detect(from handData: SingleHandGestureData) -> [BaseGestureProtocol] {

// Sort by priority (lower numbers = higher priority)

let sortedGestures = gestures.sorted { $0.priority < $1.priority }

var detectedGestures: [BaseGestureProtocol] = []

for gesture in sortedGestures {

if let singleHandGesture = gesture as? SingleHandGestureProtocol {

if singleHandGesture.matches(handData) {

detectedGestures.append(gesture)

// End processing here for exclusive gestures

if gesture.isExclusive {

break

}

}

}

}

return detectedGestures

}

}

Design Points:

- Priority-based evaluation detects more specific gestures first

- Exclusive flag skips other evaluations when specific gestures are detected

- Supports cases where multiple gestures are valid simultaneously
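The interaction between priority order and the exclusive flag is easiest to see with canned data. The sketch below uses a hypothetical `Candidate` struct in which the match result is simply stored, so only the ordering and early-exit behavior is exercised; the priority numbers are the ones used by the example gestures in this article.

```swift
// Hypothetical stand-ins used only to show evaluation order.
struct Candidate {
    let name: String
    let priority: Int      // lower number = evaluated first
    let isExclusive: Bool
    let matches: Bool      // pretend result of matching the current hand pose
}

/// Returns the names of matching candidates, stopping after an exclusive match.
func detect(_ candidates: [Candidate]) -> [String] {
    var detected: [String] = []
    for candidate in candidates.sorted(by: { $0.priority < $1.priority }) where candidate.matches {
        detected.append(candidate.name)
        if candidate.isExclusive { break }
    }
    return detected
}

let candidates = [
    Candidate(name: "Thumbs Up", priority: 100, isExclusive: false, matches: true),
    Candidate(name: "Prayer", priority: 80, isExclusive: true, matches: true),
    Candidate(name: "Peace Sign", priority: 90, isExclusive: false, matches: false)
]
print(detect(candidates)) // ["Prayer"]
```

Because Prayer has the lowest number it is evaluated first, and its exclusive flag prevents Thumbs Up from being reported even though it also matches.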

2. Performance Optimization

// Optimization during gesture registration

public func registerGesture(_ gesture: BaseGestureProtocol) {

// Duplicate check

guard !gestures.contains(where: { $0.id == gesture.id }) else {

return

}

gestures.append(gesture)

// Pre-sort by priority to speed up detection

gestures.sort { $0.priority < $1.priority }

}

// Optimization through batch registration

public func registerGestures(_ newGestures: [BaseGestureProtocol]) {

gestures.append(contentsOf: newGestures)

gestures.sort { $0.priority < $1.priority }

}

3. Debug and Logging Features

extension GestureDetector {

// Detailed log output in debug mode

public func detectWithDebugInfo(from handData: SingleHandGestureData) -> [(gesture: BaseGestureProtocol, confidence: Float)] {

var results: [(BaseGestureProtocol, Float)] = []

for gesture in gestures.sorted(by: { $0.priority < $1.priority }) {

if let singleHandGesture = gesture as? SingleHandGestureProtocol {

let confidence = singleHandGesture.confidenceScore(for: handData)

if HandGestureLogger.isDebugEnabled {

HandGestureLogger.logDebug("Gesture: \(gesture.gestureName), Confidence: \(confidence)")

}

if singleHandGesture.matches(handData) {

results.append((gesture, Float(confidence)))

}

}

}

return results

}

}

AnchorEntity Integration in visionOS 2.0

Implementation using AnchorEntity, with hand tracking enabled through the SpatialTrackingSession introduced in visionOS 2.0:

import RealityKit

import ARKit

@MainActor

class GestureTrackingSystem: System {

private let gestureDetector = GestureDetector()

static let query = EntityQuery(where: .has(HandTrackingComponent.self))

required init(scene: Scene) {

// Register gestures during system initialization

setupGestures()

}

private func setupGestures() {

gestureDetector.registerGestures([

ThumbsUpGesture(),

PeaceSignGesture(),

OKSignGesture(),

PrayerGesture()

])

}

func update(context: SceneUpdateContext) {

for entity in context.entities(matching: Self.query, updatingSystemWhen: .rendering) {

guard let handComponent = entity.components[HandTrackingComponent.self] else {

continue

}

// Create SingleHandGestureData

let handData = SingleHandGestureData(

handTrackingComponent: handComponent,

handKind: .left // or .right

)

// Detect gestures

let detectedGestures = gestureDetector.detect(from: handData)

// Notify detection results

if !detectedGestures.isEmpty {

notifyGestureDetection(detectedGestures)

}

}

}

private func notifyGestureDetection(_ gestures: [BaseGestureProtocol]) {

let gestureNames = gestures.map { $0.gestureName }

DispatchQueue.main.async {

NotificationCenter.default.post(

name: .gestureDetected,

object: gestureNames

)

}

}

}

HandGestureKit: Provided as OSS Library

HandGestureKit is published as an open-source library, free for anyone to use and improve.

Performance Optimization

1. Pre-calculation and Value Caching

Pre-calculate and cache frequently used values:

extension SingleHandGestureData {

// Calculate values during initialization

init(handTrackingComponent: HandTrackingComponent, handKind: HandKind) {

self.handTrackingComponent = handTrackingComponent

self.handKind = handKind

// Pre-calculate frequently used values

self.palmNormal = calculatePalmNormal(handTrackingComponent)

self.forearmDirection = calculateForearmDirection(handTrackingComponent)

self.wristPosition = handTrackingComponent.joint(.wrist)?.position ?? .zero

self.isArmExtended = calculateArmExtension(handTrackingComponent)

}

}

2. Early Return Optimization

Check the most selective conditions first:

public func matchesWithOptimization(_ gestureData: SingleHandGestureData) -> Bool {

// 1. Most selective conditions first (finger configuration)

if requiresOnlyIndexAndMiddleStraight {

guard validateOnlyTargetFingersStraight(gestureData, targetFingers: [.index, .middle])

else { return false }

}

// 2. Direction check (medium selectivity)

for direction in GestureDetectionDirection.allCases {

if requiresPalmFacing(direction) {

guard gestureData.isPalmFacing(direction) else { return false }

}

}

// 3. Individual finger direction checks (potentially high cost)

// ...other checks

return true

}

3. Priority-Based Detection

Skip unnecessary checks using priority:

public func detect(from handData: SingleHandGestureData) -> BaseGestureProtocol? {

let sortedGestures = gestures.sorted { $0.priority < $1.priority }

for gesture in sortedGestures {

if let singleHandGesture = gesture as? SingleHandGestureProtocol,

singleHandGesture.matches(handData) {

return gesture // Stop at first match

}

}

return nil

}

Limitations and Possibilities

Limitations of Sign Language Detection with Apple Vision Pro

1. Camera Detection Range Limitations

visionOS hand tracking has physical constraints:

- Cannot detect hands behind or beside the body: Hands outside camera field of view cannot be tracked

- Blind spots near face and behind head: Detection is difficult in these positions due to device structure

- Difficult to detect accurately when hands overlap: Occlusion reduces joint position estimation accuracy

2. Complex Hand Shape Recognition

- Interlaced finger shapes prone to misrecognition: Difficult to accurately detect complex finger crosses and combinations

- Detection accuracy of subtle hand tilts and rotations: Limited ability to distinguish fine angle differences

3. Sign Language-Specific Elements

Sign language consists of multiple elements beyond just hand shapes:

- Meaning changes with facial expressions: Facial expressions have grammatical roles in sign language, but current APIs have difficulty detecting them

- Recognition of movement speed and intensity: Important elements that change sign language meaning, but accurate detection is difficult

4. Technical Constraints

- Registering recognition patterns is challenging: Enormous pattern definitions needed to accommodate sign language diversity

- Balance with performance: Trade-off between real-time processing and accuracy

- Handling individual differences: Recognition accuracy varies with hand size and flexibility differences

- Cannot detect other person’s hands: Only the wearer’s own hands are detected (cannot read conversation partner’s sign language)

- With Enterprise API main camera access, Vision Framework could potentially enable this

- However, 3D information like HandSkeleton is unavailable, limiting to 2D image analysis with very high implementation difficulty

Still Expanding Possibilities

1. Basic Sign Language Word Recognition is Possible!

Current technology can recognize the following at a practical level:

- Standard expressions: Daily sign language like “thank you” and “please”

- Numbers and simple words: Finger spelling and number expressions can be recognized with relatively high accuracy

2. First Step Toward Improved Accessibility

Even if not perfect, it can provide significant value:

- Communication support between hearing-impaired and hearing individuals: Support for basic communication

- Simple communication in emergencies: As a means to quickly convey important information

- Promoting interest and understanding of sign language: Applications in sign language learning apps and interactive educational materials

3. Expectations for Future Technology Development

- Improved accuracy through hardware evolution: Higher resolution cameras, wider field of view, faster processing

- Combination with machine learning and AI: Improved pattern recognition accuracy and adaptation to individual differences

- Utilizing EyeSight: Apple Vision Pro’s EyeSight feature allows the wearer’s facial expressions to be visible externally, addressing the importance of facial expressions in sign language

Demo video

AnchorEntity implementation and HandGestureKit demo

Sign language detection demo

Conclusion

visionOS hand tracking capabilities open new possibilities for natural user interfaces.

Using frameworks like HandGestureKit, developers can more easily implement complex gesture recognition systems.

While current technology has limitations, proper design and optimization make it possible to build practical and responsive gesture-based applications.

As spatial computing continues to evolve, these technologies will become more sophisticated. I look forward to seeing more accessible and accurate tools built by combining them with other technologies such as AI!

Resources

- HandGestureKit (GitHub)

- Apple Developer Documentation – Hand Tracking

- visionOS Human Interface Guidelines